Polling's New Problem: Partisan Non-Response

November 11, 2020

There used to be a world where polling involved calling people, applying classical statistical adjustments, and putting most of the emphasis on interpretation. Now you need voter files and proprietary first-party data and teams of machine learning engineers. It’s become a much harder problem.

David Shor, quoted in Vox.

Following up on one of my “snap observations” from my last post, it seems likely that one source of the polling error this year – perhaps the most important – was non-response bias. David Shor, an independent polling analyst who worked on the Obama campaigns, claims that we are experiencing a partisan divide in social trust that is undermining polling results. In the past it was fairly easy to adjust for non-respondents using demographic information because, once demographics were accounted for, there was no partisan difference between non-respondents and respondents.

This is not the “shy voter” issue, although it sounds like it. That theory claims that partisan voters lie to pollsters about who they vote for because they are afraid of some kind of social disapproval from the polling interviewers. This phenomenon, according to the claim, both overcounts support for Biden and undercounts support for Trump. It’s easily tested, unlike the partisan non-response problem, and Morning Consult did just that. Here’s how it works: since the claim is that the respondent is concerned about how the interviewer would react to hearing her support for Trump, she lies to the interviewer about who she is supporting (either Biden or some other response). So, if that is true we would expect to see different responses between live interviewer polling and automated (i.e., without a live interviewer) polling. Morning Consult found no significant differences in the results.
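The logic of that test can be sketched as a comparison of two sample proportions: Trump support measured with a live interviewer versus without one. This is only a minimal illustration, not Morning Consult’s actual method; the function name `two_proportion_z` and every count below are invented for the example.

```python
import math


def two_proportion_z(successes_a, n_a, successes_b, n_b):
    """Return (difference, z, two-sided p-value) for two independent proportions."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled proportion under the null hypothesis that both modes measure the same support.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a - p_b, z, p_value


# Hypothetical counts of respondents backing Trump (NOT real polling data):
# 450 of 1,000 in live-interviewer calls, 465 of 1,000 in automated surveys.
diff, z, p_value = two_proportion_z(450, 1000, 465, 1000)
print(f"difference={diff:+.3f}, z={z:.2f}, p={p_value:.2f}")
```

If shy voters existed in meaningful numbers, the live-interviewer share would sit well below the automated share and the p-value would be small. A gap like the made-up one here is well within sampling noise.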

What Shor believes is happening is that significantly more Republicans than Democrats are refusing to respond to polls – or, more accurately, more of those voting Republican than of those voting Democratic. He believes this problem was exacerbated by Covid, during which a lot more Democratic voters than Republican voters agreed to participate in surveys.* This is not the shy voter problem (which, to be clear, does not appear to exist),** but a partisan divide between those who agree to take a polling survey and those who do not.

Shor is worth quoting at length on this problem:

For three cycles in a row, there’s been this consistent pattern of pollsters overestimating Democratic support in some states and underestimating support in other states. This has been pretty consistent. It happened in 2018. It happened in 2020. And the reason that’s happening is because the way that [pollsters] are doing polling right now just doesn’t work.

Poll Twitter tends to ascribe these mystical powers to these different pollsters. But they’re all doing very similar things. Fundamentally, every “high-quality public pollster” does random digit dialing. They call a bunch of random numbers, roughly 1 percent of people pick up the phone, and then they ask stuff like education, and age, and race, and gender, sometimes household size. And then they weight it up to the census, because the census says how many adults do all of those things. That works if people who answer surveys are the same as people who don’t, once you control for age and race and gender and all this other stuff.

But it turns out that people who answer surveys are really weird. They’re considerably more politically engaged than normal. … They also have higher levels of social trust. I use the General Social Survey’s question, which is, “Generally speaking, would you say that most people can be trusted or that you can’t be too careful in dealing with people?” The way the GSS works is they hire tons of people to go get in-person responses. They get a 70 percent response rate. We can basically believe what they say.

It turns out, in the GSS, that 70 percent of people say that people can’t be trusted. [emphasis added] And if you do phone surveys, and you weight, you will get that 50 percent of people say that people can be trusted. It’s a pretty massive gap. [Sociologist] Robert Putnam actually did some research on this, but people who don’t trust people and don’t trust institutions are way less likely to answer phone surveys. Unsurprising! This has always been true. It just used to not matter.

It used to be that once you control for age and race and gender and education, that people who trusted their neighbors basically voted the same as people who didn’t trust their neighbors. But then, starting in 2016, suddenly that shifted. If you look at white people without college education, high-trust non-college whites tended toward [Democrats], and low-trust non-college whites heavily turned against us. In 2016, we were polling this high-trust electorate, so we overestimated Clinton. These low-trust people still vote, even if they’re not answering these phone surveys.
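To make the weighting failure concrete, here is a rough sketch of what happens when pollsters weight on education but response rates also depend on social trust. Every number in `CELLS` is invented for illustration, and the function names are mine, not anything Shor or any pollster uses.

```python
# Each row: (education, trust, population share, response rate, Democratic share).
# All values are hypothetical and chosen only to illustrate the mechanism.
CELLS = [
    ("college",     "high", 0.20, 0.020, 0.60),
    ("college",     "low",  0.15, 0.005, 0.55),
    ("non-college", "high", 0.25, 0.012, 0.50),
    ("non-college", "low",  0.40, 0.003, 0.35),
]


def true_dem_share(cells):
    """Democratic share among everyone, respondents or not."""
    return sum(pop * dem for _, _, pop, _, dem in cells)


def raw_poll_share(cells):
    """Democratic share among people who actually answer, unweighted."""
    respondents = sum(pop * rate for _, _, pop, rate, _ in cells)
    dem_respondents = sum(pop * rate * dem for _, _, pop, rate, dem in cells)
    return dem_respondents / respondents


def weighted_poll_share(cells):
    """Reweight respondents so each education group matches its population share."""
    estimate = 0.0
    for edu in {c[0] for c in cells}:
        group = [c for c in cells if c[0] == edu]
        pop_share = sum(c[2] for c in group)
        respondents = sum(c[2] * c[3] for c in group)
        dem_among_respondents = sum(c[2] * c[3] * c[4] for c in group) / respondents
        estimate += pop_share * dem_among_respondents
    return estimate


print(f"true:     {true_dem_share(CELLS):.3f}")
print(f"raw poll: {raw_poll_share(CELLS):.3f}")
print(f"weighted: {weighted_poll_share(CELLS):.3f}")
```

With these made-up inputs the true Democratic share is 46.75 percent, the unweighted poll shows roughly 52.9 percent, and the education-weighted poll still shows roughly 50.4 percent. Weighting fixes the education imbalance but not the trust imbalance inside each education group. If the Democratic share were the same for high-trust and low-trust people within each group, the weighted estimate would match the true share, which is the “it just used to not matter” case.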

What’s really interesting here is Shor’s reference to Putnam’s research and his conclusion that “people who don’t trust people and don’t trust institutions are way less likely to answer phone surveys.” Republicans used to trust American institutions, at times at higher rates than Democrats, but in the past five years Trump’s repeated attacks on institutions and amplification of conspiracy theories have resonated with the GOP base. Even today, we hear from The Economist that 86% of Trump voters do not think Biden won the election legitimately – in the face of overwhelming evidence that Biden did in fact win and the absence of any evidence of widespread voter fraud.

Why is this important (to anyone besides professional pollsters)? In a general sense, all polls do is either calm or exacerbate our anxieties about an election’s potential outcome. But they do play an important role in resource allocation for political campaigns. Presumably, the partisan non-response problem is apparent in internal as well as public polling. Even so, many political operatives use public polling, either because their campaigns don’t have the resources to do the internal polling they need (not really a problem for presidential campaigns, but it can be for down-ballot campaigns) or as a reality check on their own internal polling. It was not just the public polling or Democratic internal polling that was subject to this error this year; the same was true of the GOP internal polling that we know about.

This error in the polling reminds everyone of 2016, but not for the reason that the two elections are actually similar. Many people are arguing that the polls are “broken” or “can’t be trusted.” All that does is encourage people not to respond to polls, which in turn makes the polling less reliable. What is interesting is that the size of the polling error in 2020 appears to be similar to the size of the error in 2016. In that sense, we may actually be able to learn something from this that can help adjust future polling. The Upshot might have already started figuring out a way.

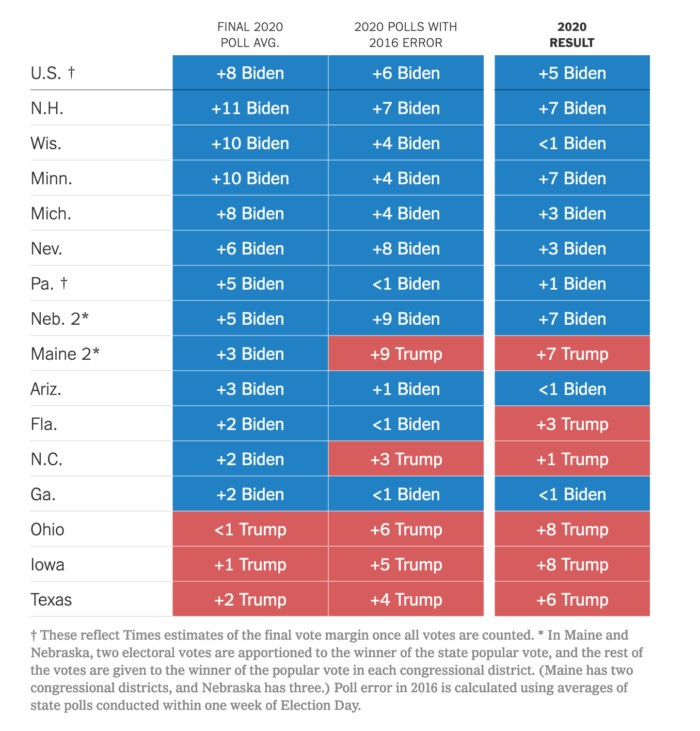

This year The Upshot, the New York Times’ polling and election operation run by Nate Cohn, did something new. Not only did it publish polling averages for each state, but it included an adjustment factor to show how each would look with a 2016 polling error and a 2012 polling error. Take a look at the table below.

The major outlier was Florida, which Trump won by a significantly bigger margin than the 2016 polling error adjustment would suggest. Overall, however, this adjustment got the final 2020 polling averages very close to the actual 2020 result. So much so that if The Upshot had presented the 2016-error-adjusted averages as “the” polling average for 2020, the entire polling community would be lauding it for getting the result as correct as polling can get.
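The arithmetic behind this kind of adjustment is simple, and a small sketch may help. I am assuming the adjustment just subtracts a past cycle’s polling miss from the current polling margin in the same state; The Upshot’s exact procedure is not described here. The function name `apply_past_error`, the states, and all the numbers are hypothetical.

```python
def apply_past_error(poll_margin, past_poll_margin, past_result_margin):
    """Shift a current polling margin by the miss observed in an earlier cycle.

    Margins are Democratic minus Republican, in percentage points.
    """
    # Positive error means the earlier polls overstated the Democratic margin.
    past_error = past_poll_margin - past_result_margin
    return poll_margin - past_error


# Hypothetical states and numbers, invented for illustration only.
states = {
    "State A": {"poll_2020": 8.0, "poll_2016": 6.0, "result_2016": -1.0},
    "State B": {"poll_2020": 3.0, "poll_2016": 2.0, "result_2016": 1.0},
}

adjusted = {
    name: apply_past_error(s["poll_2020"], s["poll_2016"], s["result_2016"])
    for name, s in states.items()
}

for name, margin in adjusted.items():
    print(f"{name}: adjusted margin {margin:+.1f}")
```

In this toy case, State A’s comfortable 8-point lead shrinks to a 1-point lead once a 7-point miss from the earlier cycle is applied, while State B barely moves. The adjustment only helps if the direction and size of the error carry over from one cycle to the next, state by state.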

Overall, the polling was pretty good in 2018, but there were polling misses specific to certain areas of the country. In Florida, the polling overestimated Democratic support. In Nevada, it underestimated Democratic support. It would be interesting to see the 2018 polling error applied to the 2020 final polling averages. It would probably be less accurate than the 2016 error, but it might show us some important potential regional variation adjustments.

Shor seems to think that we are going to have to move towards modeling rather than polling. (To be honest, I am not sure what Shor is thinking about the future of polling. The interview is not clear about that.) While I have heard more than one person say “the smart people got it wrong” about this year’s polling, they have clearly not paid attention to the political science models, one of which – Sabato’s Crystal Ball – was nearly spot on. The success of Sabato’s model tells us that perhaps we should be paying more attention to the fundamentals and the expertise of serious political observers (I am not talking about pundits, just to be clear). Consider Sabato’s final map below.

The final projection was for Biden to win 321 electoral votes. He is likely to win 306. Sabato got every state correct except for North Carolina. North Carolina is very close and still not called, but it will likely go to Trump. If Biden does overtake Trump in NC, he will win 321 electoral votes. In contrast, based on polling, FiveThirtyEight projected Biden to win 348 and The Upshot projected Biden to win 350. The Economist projected a large range of electoral vote probabilities for Biden, but forecast Biden to win 54.4% of the vote to Trump’s 45.6%. The actual 2020 popular vote margin will likely be 51-47. That’s not as big a miss as it appears, but it doesn’t tell us much about the call in each state.
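One way to see why the popular vote miss is smaller than it looks is to put both sets of numbers on the same footing. The sketch below assumes The Economist’s 54.4 and 45.6 are shares of the two-party vote (they sum to 100, though that is my inference), while 51-47 includes third-party votes. The helper `two_party_share` is just for illustration.

```python
def two_party_share(dem_pct, rep_pct):
    """Democratic share of the combined Democratic and Republican vote, in percent."""
    return 100.0 * dem_pct / (dem_pct + rep_pct)


# Forecast figures as quoted in the post (assumed to be two-party shares).
forecast_dem, forecast_rep = 54.4, 45.6
# Likely final result as quoted in the post (includes third-party votes).
likely_dem, likely_rep = 51.0, 47.0

forecast_share = two_party_share(forecast_dem, forecast_rep)
actual_share = two_party_share(likely_dem, likely_rep)

# Miss measured on Biden's two-party share versus on the raw margin.
share_miss = forecast_share - actual_share
margin_gap = (forecast_dem - forecast_rep) - (likely_dem - likely_rep)

print(f"forecast two-party share: {forecast_share:.1f}")
print(f"likely two-party share:   {actual_share:.1f}")
print(f"miss on share:            {share_miss:.1f}")
print(f"gap between raw margins:  {margin_gap:.1f}")
```

Comparing raw margins (8.8 points forecast versus 4 points likely) makes the miss look like nearly 5 points. On Biden’s two-party share, the forecast is off by a little under 2.5 points, because every point of error on one candidate’s share shows up twice in the margin.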

The error seems to have been more pronounced in down-ballot races than in the presidential race. It’s true that a lot of pollsters – particularly the high-quality ones – had Biden with a much bigger lead than he ended up with. But he still won. This means he survived the same polling error that Clinton did not in 2016. This is something that Nate Silver of FiveThirtyEight has noted as showing that the forecasts did have a measure of success this year.

Make no mistake, there was polling error this year that is very troubling for the future of polling. Shor may be right that we have entered a new political age of partisan non-response to polling. Maybe the political science models can show us a new way forward, maybe The Upshot has given us a hint on how we need to adjust the polling in this new environment, or maybe machine learning is the future. Nevertheless, the forecasts this year projected Biden to win and he did win.

There is another thing we are going to have to get used to if mail voting continues to be used by a significant portion of the electorate: the order in which states count votes. There was no uniform rule among the states this year on the order of the count. Some states, like Florida, counted mail and early votes first. Others, like Pennsylvania, counted them last. This made some people think Biden was winning big as predicted in some places (although the early vote was not as big for the Democratic ticket as many predicted) while it made others think Trump was winning states he would ultimately lose. Many people are influenced by initial reactions to Election Night returns and those impressions are sticky. In the end, the presidential race was closer than the polling suggested, but not by as much as many people think.

* According to Shor: “Campaign pollsters can actually join survey takers to voter files, and starting in March, the percentage of our survey takers who were, say, ActBlue donors skyrocketed. The average social trust of respondents went up, core attitudes changed – basically, liberals just started taking surveys at really high rates. That’s what happened.”

** From his professional experience, Shor says he does not believe voters lie to pollsters once they have agreed to participate in the poll.